This keynote from the NIPS 2017 conference by Kate Crawford is excellent – a good resource for anyone interested in thinking and communicating about the issues of bias in AI.

Two excellent reflections on ethics in relation to Google’s AI principles

Anab Jain and Lucy Suchman have each written an excellent piece reflecting upon Google’s announcement of its ‘AI principles’ and its apparent commitment not to work on the US Government’s “project Maven”. I recommend you read both if you’re interested in studying technology (not just AI).

Here’s a couple of quotes that stood out, but you should definitely read both pieces:

Corporate Accountability – Lucy Suchman

The principle “Be accountable to people,” states “We will design AI systems that provide appropriate opportunities for feedback, relevant explanations, and appeal. Our AI technologies will be subject to appropriate human direction and control.” This is a key objective but how, realistically, will this promise be implemented? As worded, it implicitly acknowledges a series of complex and unsolved problems: the increasing opacity of algorithmic operations, the absence of due process for those who are adversely affected, and the increasing threat that automation will translate into autonomy, in the sense of technologies that operate in ways that matter without provision for human judgment or accountability. […]

Tackling the Ethical Challenges of Slippery Technology – Anab Jain

The overriding question for all of these principles, in the end, concerns the processes through which their meaning and adherence to them will be adjudicated. It’s here that Google’s own status as a private corporation, but one now a giant operating in the context of wider economic and political orders, needs to be brought forward from the subtext and subject to more explicit debate.

Given the networked nature of the technologies that companies like Google create, and the marketplace of growth and progress that they operate within, how can they control who will benefit and who will lose? What might be the implications of powerful companies taking an overt moral or political position? How might they comprehend the complex possibilities for applications of their products? […]

How many unintended consequences can we think of? And what happens when we do release something potentially problematic out into the world? How much funding can be put into fixing it? And what if it can’t be fixed? Do we still ship it? What happens to our business if we don’t? All of this would mean slowing down the trajectory of growth, it would mean deferring decision-making, and that does not rank high in performance metrics. It might even require moving away from the selection of The Particular Future in which the organisation is currently finding success.

Ocado automated fulfilment centre & automation promo videos

Ocado, an online grocery delivery service in the UK, some time ago pivoted into providing automation solutions for other retailers. They have a division, or separate company, called Ocado Technology, which offers other retailers systems for fulfilment centre automation: “Ocado Smart Platform” [buzzwords ahoy!]. So, while the above video is interesting, insofar as it demonstrates yet another not-exactly-hospitable factory-like code/space, what is perhaps more interesting is that this system seems to have been developed not only to fulfil Ocado orders in Wiltshire/Hampshire but also as an advert for what Ocado Technology does. See this report from Reuters: Ocado’s robot army courts global food retailers.

Ocado have promoted previous automated systems through videos too. Around five years ago, the same claims about ‘machine learning’ and so on were made about a fulfilment centre system that looks a lot more like an airport baggage sorting system.

I’ve become mildly obsessed with these videos of automated factories and fulfilment centres – they have become a sort of genre unto themselves. There are clearly ways in which promotional videos – made by the equipment/tech firms responsible for creating the systems or the firm implementing them – get translated into video news stories. Likewise, there are ways of talking about the ‘robot’ nature of these things. All of this demonstrates our ongoing negotiation of norms of understanding, describing and rationalising the technology, its implementation and what it may or may not portend for work and so on. If you’ve read this blog in the last year you may remember I talk about this stuff as the formation/formalisation of an ‘automative imagination’.

As a sort of throw-away aside – the displacement of labour involved in Ocado’s, and others’, systems of automation may well be important too. If you ramp up the capacity to ‘fulfil’ orders in the distribution centre then you need to expand the capacity to get them delivered. We don’t have driverless vehicles on our roads, yet, so this possibly means fewer pickers and packers and a lot more delivery drivers.

Reblog> digital | visual | cultural

Gillian Rose has a new project, which she’s blogged about. It sounds interesting. I was sort of wondering if the analysis of a “specific way of seeing the world through digital visualising technologies emerging” might cross over with Matt Jones’ ‘sensor vernacular’ and James Bridle’s, and others’, conceptualisation of a ‘new aesthetic’…

Lovely that such work is supported by an institution (Oxford). I am sure it will create all sorts of interesting linkages and conversations.

digital | visual | cultural

I’m very excited to announce a new project: Digital | Visual | Cultural. D|V|C is a series of events which will explore how the extensive use of digital visualising technologies creates new ways of seeing the world.

The first event will be on June 28, when Shannon Mattern will give a public lecture in Oxford. Shannon is the author of the brilliant Code and Clay, Data and Dirt as well as lots of great essays for Places Journal. ‘Fifty Eyes on a Scene’ will replay a single urban scene from the perspective of several sets of machinic and creaturely eyes. That lecture will be free to attend but you’ll need to book. Booking opens via the D|V|C website on 23 April. It will also be livestreamed.

I’m working on this with Sterling Mackinnon, and funding is coming from the School of Geography and the Environment, Oxford University, and St John’s College Oxford.

The website has more info at dvcultural.org, and you can follow D|V|C on Twitter @dvcultural and on Instagram at dvcultural. There’ll be a couple more events in 2019 so follow us to stay in touch.

So that’s the practicalities. What’s the logic?

The key questions D|V|C will be asking are: Is a specific way of seeing the world through digital visualising technologies emerging? If so, what are its conditions and consequences?

CFP> ‘The Spectre of Artificial Intelligence’

An interesting CFP for Spheres: Journal of Digital Culture. Heard through CDC Leuphana:

‘The Spectre of Artificial Intelligence’

Over the last years we have been witnessing a shift in the conception of artificial intelligence, in particular with the explosion in machine learning technologies. These largely hidden systems determine how data is gathered, analyzed, and presented or used for decision-making. The data and how it is handled are not neutral, but full of ambiguity and presumptions, which implies that machine learning algorithms are constantly fed with biases that mirror our everyday culture; what we teach these algorithms ultimately reflects back on us and it is therefore no surprise when artificial neural networks start to classify and discriminate on the basis of race, class and gender. (Blockbuster news regarding that women are being less likely to get well paid job offers shown through recommendation systems, a algorithm which was marking pictures of people of color as gorillas, or the delivery service automatically cutting out neighborhoods in big US cities where mainly African Americans and Hispanics live, show how trends of algorithmic classification can relate to the restructuring of the life chances of individuals and groups in society.) However, classification is an essential component of artificial intelligence, insofar as the whole point of machine learning is to distinguish ‘valuable’ information from a given set of data. By imposing identity on input data, in order to filter, that is to differentiate signals from noise, machine learning algorithms become a highly political issue. The crucial question in relation to machine learning therefore is: how can we systematically classify without being discriminatory?

In the next issue of spheres, we want to focus on current discussions around automation, robotics and machine learning, from an explicitly political perspective. Instead of invoking once more the spectre of artificial intelligence – both in its euphoric as well as apocalyptic form – we are interested in tracing human and non-human agency within automated processes, discussing the ethical implications of machine learning, and exploring the ideologies behind the imaginaries of AI. We ask for contributions that deal with new developments in artificial intelligence beyond the idiosyncratic description of specific features (e.g. symbolic versus connectionist AI, supervised versus unsupervised learning) by employing diverse perspectives from around the world, particularly the Global South. To fulfil this objective, we would like to arrange the upcoming issue around three focal points:

- Reflections dealing with theoretical (re-)conceptualisations of what artificial intelligence is and should be. What history do the terms artificiality, intelligence, learning, teaching and training have and what are their hidden assumptions? How can human intelligence and machine intelligence be understood and how is intelligence operationalised within AI? Is machine intelligence merely an enhanced form of pattern recognition? Why do ‘human’ prejudices re-emerge in machine learning algorithms, allegedly devised to be blind to them?

- Implications focusing on the making of artificial intelligence. What kind of data analysis and algorithmic classification is being developed and what are its parameters? How do these decisions get made and by whom? How can we hold algorithms accountable? How can we integrate diversity, novelty and serendipity into the machines? How can we filter information out of data without reinserting racist, sexist, and classist beliefs? How is data defined in the context of specific geographies? Who becomes classified as threat according to algorithmic calculations and why?

- Imaginaries revealing the ideas shaping artificial intelligence. How do pop-cultural phenomena reflect the current reconfiguration of human-machine-relations? What can they tell us about the techno-capitalist unconscious? In which way can artistic practices address the current situation? What can we learn from historical examples (e.g. in computer art, gaming, music)? What would a different aesthetic of artificial intelligence look like? How can we make the largely hidden processes of algorithmic filtering visible? How to think of machine learning algorithms beyond accuracy, efficiency, and homophily?

Deadlines

If you would like to submit an article or other, in particular artistic contribution (music, sound, video, etc.) to the issue, please get in touch with the editorial collective (contact details below) as soon as possible. We would be grateful if you would submit a provisional title and short abstract (250 words, max) by 15 May, 2018. We may have questions or suggestions that we raise at this point. Otherwise, final versions of articles and other contributions should please be submitted by 31 August, 2018. They will undergo review in accordance with the peer review process (s. About spheres). Any revisions requested will need to be completed so that the issue can be published in Winter 2018.

Digital work and automation link dump

Ok. Whilst I was not blogging I kept finding things through Twitter and reading blogs that I find interesting. I kept thinking “oh, I ought to write something about that”… the thing is, I haven’t had the time and I don’t have the time now. So… I’m going to do a sort of annotated link thingamajig so that I don’t lose these things (I have over 45 tabs open in Chrome on my phone, this is unworkable) and also to share and to maybe invite other people to comment on this stuff..?!

The future of work, a history by Kevin Baker – A relatively long piece of writing that charts a history of American worrying about the replacement of the human worker with machines. Some interesting historical details that help contextualise some of the alarmism today. What I like about this is that the piece ends by reminding us all that there are choices to be made, which are political, but it also highlights how inescapable forms of determinism are (as Sally Wyatt so expertly tells us).

Tech culture, unions and the blind spot of meritocracy by Wendy Liu in Technology and The Worker (#2) – an interesting piece that documents a conversation with Xavier Denis, a former engineer at Shopify, about what working in the US tech market is like – the sorts of working conditions, norms and management practices.

Automation, skills use and training by Ljubica Nedelkoska and Glenda Quintini (OECD) – A report that builds upon, and that its authors claim improves, assessments of the potential for the automation of particular kinds of work. “Beyond the share of jobs likely to be significantly disrupted by automation of production and services, the accent is put on characteristics of these jobs and the characteristics of the workers who hold them.” This contributes to the ongoing arguments around the likelihood or not of job/role/work automation.

What can machine learning do? Workforce implications by Erik Brynjolfsson (MIT) and Tom Mitchell (Carnegie Mellon) in Science – A short-ish “Insights” article that makes some fairly grandiose and sweeping claims about the ‘impacts’ of automation on the workforce (with an American focus). Some references are made but if you follow them up it seems to me that the statements they are used to support make fairly big leaps. This, in contrast to the OECD report, is a stoking of the now brightly burning fire of the imaginings of automation.

The Guardian view on automation: put human needs first – A Grauniad editorial that passes comment on the above OECD report. It’s interesting to see the reception, interpretation and sometimes misrepresentation (I don’t think that’s what the Graun are doing here) of these academic economic reports. This plays into the general, normative, discussions and senses of the risks and/or realities of automation (this is a part of what I want to write a book about).

See how one woman and her team of robots move thousands of boxes in a Chinese warehouse. pic.twitter.com/WsiRC10Uoo

— BBC Click (@BBCClick) April 16, 2018

BBC Click offer a Twitter-length version of ‘what it’s like to work with robots’.

Shynola re-imagine/visualise the opening section of Matthew De Abaitua’s novel Red Men – a PKD-like imaginative exploration of the emergence of AI.

The Incomplete Vision of John Perry Barlow by April Glaser (Slate) – Written following Barlow’s death earlier this year, this reflects upon his “Declaration of the independence of cyberspace” and the rise of the EFF. Fits nicely with Fred Turner’s work examining the hippy-libertarian nexus of Silicon Valley moguls. In many ways this gets at some of the ideological foundations and/or misgivings of automation talk.

Face Recognition Glasses Augment China’s Railway Cops by Bibek Bhandari (SixthTone) – An article about the alleged deployment of facial recognition through AR glasses for Chinese police. Predictable comparisons to “Black Mirror” and other forms of dystopianism.

The Ethics Advisory Group of the European Data Protection Supervisor Report on Data Ethics (2018) [PDF] – On the back of the GDPR and contemporary debates around privacy, in light of perennial controversies with Facebook and others, the Ethics Advisory Group published a report claiming that: “The objective of this report is thus not to generate definitive answers, nor to articulate new norms for present and future digital societies but to identify and describe the most crucial questions for the urgent conversation to come.” The report is written by J. Peter Burgess, Luciano Floridi, Aurélie Pols and Jeroen van den Hoven.

The network Uber drivers built by Alex Rosenblat – an article that addresses some of the ways that Uber drivers have collectively organised in order to manage working with a quasi-automated system in which the policies are not necessarily in the best interests of the worker.

Artificial Intelligence Technology Strategy (Report of Strategic Council for AI Technology) (a Japanese government report) [PDF] – A 25-page report written for the Japanese government. It’s fairly dry and dense and has some crazy Powerpoint-style diagrams but it is sort of interesting as a documenting of an apparently strategic governmental approach to “AI”.

Why the Luddites matter : Librarian Shipwreck – A good blogpost on who the Luddites really were and framing them as an interesting means of getting at how popular understandings of technology might be found wanting.

Sci-Fi doesn’t have to be depressing: welcome to Solar Punk by Tom Cassauwers – What if we don’t write dystopian science fiction but build imaginary worlds based on positive and affirmative uses of technology? That is the premise of what is called ‘Solar Punk’, as outlined here by Tom Cassauwers – who charts the movement with Sarena Ulibarri, an editor who is a key proponent of the sub-genre.

Our driverless future on CNN Money – a video feature on driverless cars with four episodes and a couple of additional videos. There are some interesting interviews and it’s not all boosterism. It’s sort of emblematic of the current discourse on automation. If nothing else this is probably useful for teaching purposes as there are some clips that nicely sum up some of the key questions being asked.

A reminder: CFP > New Geographies of Automation? RGS-IBG 2018, Cardiff

A friendly reminder and invitation to submit ideas for the below proposed session for the RGS-IBG annual conference in late August in Cardiff.

My aim with this session is to convene a conversation about as wide a range of tropes about automation as possible. Papers needn’t be empirical per se or about actually existing automation, they could equally be about the rationales, promises or visions for automation. Likewise, automation has been about for a while, so historical geographies of automation, in agriculture for example, or policies for automation that have been tried and failed would also be welcome.

There are all sorts of ways that ‘automation’ has been packaged in other rubrics, such as ‘smart’ things, cities and so on, or perhaps become a ‘fig leaf’ or ‘red herring’ to cover for unscrupulous activities, such as iniquitous labour practices.

I guess what I’m driving at is – I welcome any and all ideas relevant to the broad theme!

New Geographies of Automation?

Please send submissions (titles, abstracts (250 words) and author details) to me by 31st January 2018.

This session invites papers that respond to the variously promoted or forewarned explosion of automation and the apparent transformations of culture, economy, labour and workplace we are told will ensue. Papers are sought from any and all branches of geography to investigate what contemporary geographies of automation may or should look like, how we are/could/should be doing them and to perhaps question the grandiose rhetoric of alarmism/boosterism of current debates.

Automation has been the recent focus of hyperbolic commentary in print and online. We are warned by some of the ‘rise of the robots’ (Ford 2015) sweeping away whole sectors of employment or by others exhorted to strive towards ‘fully automated luxury communism’ (Srnicek & Williams 2015). Beyond the hyperbole it is possible to trace longer lineages of geographies of automation. Studies of the industrialisation of agriculture (Goodman & Watts 1997); Fordist/post-Fordist systems of production (Harvey 1989); shifts to globalisation (Dicken 1986) and (some) post-industrial societies (Clement & Myles 1994) stand testament to the range of work that has addressed the theme of automation in geography. Indeed, in the last decade geographers have begun to draw out specific geographical contributions to debates surrounding ‘digital’ automation. From a closer attention to labour and workplaces (Bissell & Del Casino 2017) to the interrogation of automation in governance and surveillance across a range of scales (Amoore 2013, Kitchin & Dodge 2011) – the processes and experiences of automation have (again) become a significant concern for geographical research.

The invitation of this session is for papers that consider contemporary discussions, movements and propositions of automation from a geographical perspective (in the broadest sense).

Examples of topics might include (but are certainly not limited to):

- Promises of ‘smart’ and ‘intelligent’ environments

- Identity, difference and machines

- ‘Algorithmic’ places/spaces

- Activism for/against automation

- Autonomous weapons systems

- Robotics and the everyday

- Techno-bodily relations of automation

- Working with robots

- AI, machine learning and cognitive work

- Automation and bias

- Sovereignty, law-making and automated systems

- Automated governance and policing

- Boosterism and tales of automation

- The economics of automation

- Material cultures of robots

- Mobilities and materialities of ‘driver-less’ vehicles

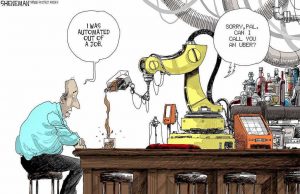

The Guardian of automation

I have been looking back over the links to news articles I’ve been collecting together about automation and I’ve been struck in particular by how the UK newspaper The Guardian has been running at least one story a week concerning automation in the last few months (see their “AI” category for examples, or the list below). Many are prompted by reports and press releases about particular things, so it’s not like they’re unique in pushing these narratives but it is striking, not least because lots of academics (that I follow anyway) share these stories on Twitter and it becomes a self-reinforcing, somewhat dystopian (‘rise of the robots’) narrative. I’m sure that we all adopt appropriate critical distance when reading such things but… there is a sense in which the ‘robots are coming for our jobs’ sort of arguments are being normalised and sedimented without a great deal of public critical reflection.

We might ask in response to the ‘automation is taking jobs’ arguments: who says? (quite often: management consultants and think tanks) and: how do they know? It seems to me that the answers to those questions are pertinent and probably less clear, and so more interesting(!), than one might imagine.

Here’s a selection of the Graun’s recent automation coverage:

- Rise of the robots and all the lonely people (13/12/17)

- Don’t like talking to people? Automation will save us from the hellscape of human interaction (13/12/17)

- Robots can set us free and reverse decline, says Labour’s Tom Watson (10/12/17)

- The rise of the robots brings threats and opportunities [letters] (26/11/17)

- Meet your new cobot: is a machine coming for your job? (25/11/17)

- The Guardian view on productivity: the robots are coming [Editorial] (25/11/17)

- Philip Hammond pledges driverless cars by 2021 and warns people to retrain (23/11/17)

- From Peppa Pig to Trump, the web is shaping us. It’s time we fought back (17/11/17)

- Truck drivers like me will soon be replaced by automation. You’re next (17/11/17)

- The machine age is upon us. We must not let it grind society to pieces [by Chuka Umunna MP] (14/11/17)

- Swift action needed to set framework for AI and machine learning [Letter/op-ed] (10/11/17)

Video> ‘AI all around us’ – Hwang talks ethics and governance

On TVO, a public service broadcaster in Ontario, Tim Hwang, Director of the new Ethics and Governance of AI fund, talks about various issues in an accessible way:

UK Government ramping up ‘robotic process automation’

Quite by chance I stumbled across the twitter coverage of a UK Authority event entitled “Return of the Bots” yesterday. There were a range of speakers it seems, from public and private sectors. An interesting element was the snippets about the increasing use of process automation by the UK Government.

Here’s some of the tweets I ‘favourited’ for further investigation (below). I hadn’t quite appreciated where government had got to. It would be interesting to look into the rationale both central and local government are using for RPA – I assume it is cost-driven(?). I hope to follow up some of this…

Chris Hall @cabinetofficeuk – Over 10k robots now at work in 57+ processes @HMRCgovuk #ReturnOfTheBots pic.twitter.com/jRHvbZtzcG

— Helen Olsen Bedford (@helenolsen) November 14, 2017

“Within 3-5 yrs robotics will be commonplace in government” https://t.co/u8YiQLX7Ae

— Helen Olsen Bedford (@helenolsen) November 14, 2017

@cabinetofficeuk & @CapgeminiConsul partnership aims to take 1-2 years off time for UK #publicsector to adopt #robotics process automation https://t.co/OFVOsGNTlL #ReturnoftheBots pic.twitter.com/TcpnGJKacK

— UKAuthority (@UKAuthority) November 15, 2017

What’s the potential of #RPA in government? #ReturnOfTheBots pic.twitter.com/mFip6tbkTf

— Marcus Ferbrache (@marcusferbrache) November 14, 2017

Highlight 1. Prof Birgitte Andersen @BigInnovCentre suggests we should be thinking about ‘the new rules, norms and standards for delivering public services with regards to AI’ #AI #returnofthebots @UKAuthority pic.twitter.com/E8njgSa5aS

— Jon Robertson (@JonSRobertson) November 14, 2017